Moralizing #Privacy & #Security Victim Blaming in the iCloud #Hack

When JP Morgan got hacked, it was amazing how many people cried: “What sort of idiot would put their most personal financial information on the internet?” and “If someone accesses their bank account on their phone they’re just asking for it to get published online.” Wait a second. Absolutely no one did that. (OK, maybe some luddites did, but let’s call that out of scope.) This iCloud hack has come to an intersection of two things I think about a lot. I’m the father of two daughters. Two daughters that are both digital natives. Of course, being really immersed in these security and privacy issues I’ve told them what to avoid so much that they both give me major eye roll when I launch into the speech in response to things like this. But not everyone lives with someone like me. Not everyone even knows someone like me. Most people do not. And that shouldn’t matter.

What crystallized for me in this drama about the iCloud hack is the parallel between the language being used about these women who have had their (very) personal data exposed and the language people use when they fall into victim blaming in sexual assaults. Let me stop right there. People would perhaps rightly choose to react badly to my comparing digital security and sexual assault. I’m not trying to hold the two up and equate them. Though it is interesting that some people have called this a “sex crime”. Clearly, sexual assault and hacking live in different moral spheres. But the language people are using to describe their thoughts about this hack in particular has a big “blame the victim” tone to it. The intimate nature of this data is clearly part of it. The fact that people feel entitled to trespass on the female body, especially the celebrity female body, also has to do with it. There is likely a dash of defensiveness built in as well – people know they are not always angels with their own iPhones and clearly aren’t sure about what that phone may do with their more sensitive moments. They may not take nude photos of themselves, but would their digital trail lead to that “innocent” drink with a past lover?

The key concept here to me is that we cannot expect better security when we’re going to blame the victim of a data breach. The fact that a vulnerability was exposed just before and patched just after this happened is a much more fruitful place to look for root cause. But root cause isn’t blame. Being a celebrity doesn’t grant you magical technology awareness. For those thinking “they have people to do that,” I ask you to imagine handing your phone, which you consider one of your most private spaces in what would be a very public life, over to someone else on a regular basis. These celebrities are likely regular users just like everyone else – maybe even more defensive of a small island of “mine” in a sea of “public space” that they have in their lives. Then you need only realize how few people understand the privacy and security implications of the rat’s maze of settings on the average smart phone. Most people don’t know what half the little checkboxes and sliders mean, much less know the exact right combination of settings that will protect them from hackers and cloud vulnerabilities. If we didn’t think the people using online banking were at fault, why do we think these celebrities are?

Is this security fatalism? If the consumers have no chance to get it right, what can they do? Clearly, there are the “eat your vegetables” answers: turn on the best security, use better passwords, don’t trust the man. That’s the path that can lead to victim blaming, but there is truth in the idea that they could do more to take charge. But if the vulnerability was built in, then even their best effort wouldn’t help them. The moralistic answer (“don’t take those sorts of pictures because they are bad”) is absolutely victim blaming. It’s a free country. I like secret option number three: collectively demand better built-in security and privacy that works well and is clear. Maybe that’s just as unrealistic as the “eat your veggies” options. But consumer demand has driven safety in cars, water, air, and a lot more. Maybe a few celebrities are precisely what we needed to get that sort of collective stand for better security going.

My thoughts from the White House OSTP “Big Data” RFI

(1) What are the public policy implications of the collection, storage, analysis, and use of big data? For example, do the current U.S. policy framework and privacy proposals for protecting consumer privacy and government use of data adequately address issues raised by big data analytics?

Current policy is not sufficient to address big data issues. There are many good proposals, but the speed with which they are taking shape means they are being lapped by the constantly shifting realities of the technology they are meant to shape. NSTIC (National Strategy for Trusted Identities in Cyberspace http://is.gd/JOrjCw) has been an excellent example of this. That digital identity is core to properly addressing big data should be obvious. How can we hope to protect privacy if we cannot identify the proper steward of data? How can we identify data stewards if the people who ought to be identified have no consistent digital identity? The very founding notion of NSTIC, trusted identities, raises the question of whether we are prepared to empower people by assigning responsibility. If we do not have identities that can be trusted, then we don’t even have one of the basic building blocks that would be required to approach big data as a whole.

That said, the implications that big data has are too large to ignore. In “The Social, Cultural & Ethical Dimensions of Big Data” (http://is.gd/EGe7tD), Tim Hwang raised the notion that data is the basic element in (digital) understanding; and further that understanding can lead to influence. This is the big data formulation of the notion that knowledge leads to rights, and rights lead to power – the well tested idea of Michel Foucault. In the next century, the power of influence will go to those who have understanding culled from big data. This will be influence over elections, economies, social movements and the currency that will drive them all – attention. People create big data in what they do, but they also absorb huge amounts of data in doing so. The data that can win attention will win arguments. The data that gets seen will influence all choices. We see this on the internet today as people are most influenced not by what they read which is correct but rather what they see that holds their attention. And gaining that influence seems to be playing out as a winner-takes-all game. With nothing short of the ethical functioning of every aspect of human life on the line, big data policy implications cannot be overstated.

(2) What types of uses of big data could measurably improve outcomes or productivity with further government action, funding, or research? What types of uses of big data raise the most public policy concerns? Are there specific sectors or types of uses that should receive more government and/or public attention?

The amount of data involved in some big data analysis and the startlingly complex statistical and mathematical methods used to power them give an air of fairness. After all, if it’s all real data powered by cold math, what influence could there be hiding in the conclusions? It is when big data is used to portray something as inherently fair, even just, that we need to be the most concerned. Any use of big data that is meant to make things “more fair” or “evenly balanced” should immediately provoke suspicion and incredulity. Just a small survey of current big data use shows this to be true. Corrine Yo from the Leadership Conference gave excellent examples of how surveillance is unevenly distributed in minority communities, driven by big data analysis of crime. Clay Shirky showed how even a small issue like assigning classes to students can be made to appear fair through statistics applied to big data when there are clearly human fingers tipping the scales. There are going to be human decisions and human prejudices built into every big data system for the foreseeable future. Policy needs to dictate what claims to fairness and justice can be made and outline how enforcement and transparency must be applied in order to be worthy of those claims.

The best way for government to speed the nation to ethical big data will be to fund things that will give us the building blocks of that system. In no particular order a non-exhaustive list of these ethical building blocks will be trusted identity, well defined ownership criteria of data generated by an individual directly and indirectly, simple and universal terms for consent to allow use of data, strong legal frameworks that protect data on behalf of citizens (even and especially from the government itself), and principles to guide the maintenance of this data over time, including addressing issues of human lifecycles (e.g. what happens to data about a person once they are dead?). There are many current proposals that apply here, e.g. NSTIC as mentioned above. All of these efforts could use more funding and focus.

(3) What technological trends or key technologies will affect the collection, storage, analysis and use of big data? Are there particularly promising technologies or new practices for safeguarding privacy while enabling effective uses of big data?

Encryption is often introduced as an effective means to protect rights in the use of data. While encryption will doubtless be part of any complete solution, today’s actual encryption is used too little and when used often presents too small a barrier for the computing power available to the determined. Improvements in encryption, such as quantum approaches, will surely be a boon to enforcement of any complete policy. The continued adoption of multi-factor authentication, now used in many consumer services, will also be an enabler to the type of strong identity controls that will be needed for a cooperative control framework between citizens and the multitude of entities that will want to use data about the citizens. As machines become better at dealing with fuzzy logic and processing natural language, there will be more opportunities to automate interactions between big data analysis and the subjects of that analysis. When machines can decide when they need to ask for permission and know how to both formulate and read responses to those questions in ways that favor the human mode of communication, there will be both more chances for meaningful decisions on the part of citizens and more easily understood records of those choices for later analysis and forensics.

(4) How should the policy frameworks or regulations for handling big data differ between the government and the private sector? Please be specific as to the type of entity and type of use (e.g., law enforcement, government services, commercial, academic research, etc.).

Policies governing interaction of government and private sector are one area where much of what is defined today can be reused. Conversely, where the system is abused today big data will multiply opportunities for abuse. For example, law enforcement data, big or not, should always require checks and balances of the judicial process. However, there is likely room for novel approaches where large sets of anonymized data produced from law enforcement could be made available to the private and educational sectors en masse as long as that larger availability is subject to some judicial check on behalf of the people in place of any individual citizen. Of course, this assumes a clear understanding of things being “anonymized” – one of many technical concepts that will need to be embedded in the jurisprudence to be applied in these circumstances. There are cracks in the current framework, though. These can allow data normally protected by regulations like HIPAA to seep out via business partners and other clearing house services that are given data for legitimate purposes but not regulated. All instances of data use at any scale must be brought under a clear and consistent policy framework if there is any hope to forge an ethical use of big data.

Is the ID ecosystem #NSTIC wants too much risk for an IdP?

I’m gearing up to go to the NSTIC convened steering group meeting in Chicago next week. Naturally, my inner nerd has me reviewing the founding documents, re-reading the NSTIC docs, and combing through the bylaws that have been proposed (all of which can be found here). I am also recalling all the conversations where NSTIC has come up. One trend emerges. Many people say they think the NSTIC identity provider responsibilities are too much risk for anyone to take on. With identity breaches so common now that only targets with star power make the news, there does seem to be some logic to that. If your firm was in the business of supplying government approved identities and you got hacked then you are in even hotter water, right?

The more it rolls around in my head, the more I think the answer is: not really. Let’s think about the types of organization that would get into this line of work. One that is often cited is a mobile phone provider. Another is a website with many members. One thing these two classes of organization – and most others I hear mentioned – have in common is that they are already taking on the risk of managing and owning identities for people. They already have the burden of the consequences in the case of a breach. Would having the government seal of approval make that any less or more risky? It’s hard to say at this stage, but I’m guessing not. It could lessen the impact in one sense because some of the “blame” would rub off on the certifying entity. “Yes, we got hacked – but we were totally up to the obviously flawed standard!” If people are using those credentials in many more places since NSTIC’s ID Ecosystem ushers in this era of interoperability (cue acoustic guitar playing kumbaya), then you could say the responsibility does increase because each breach is more damage. But the flipside of that is there will be more people watching, and part of what this should do is put in place better mechanisms for users to respond to that sort of thing. I hope this will not rely on users having to see some news about the breach and change a password as we see today.

This reminds me of conversations I have with clients and prospects about single sign on in the enterprise. An analogy, in the form of a question, a co-worker came up with is a good conversation piece: would you rather have a house with many poorly locked doors or one really strongly locked door? I like it because it does capture the spirit of the issues. Getting in one of the poorly locked doors may actually get you access to one of the more secure areas of the house behind one of the better locked doors because once you’re through one you may be able to more easily move around from the inside of the house. Some argue that with many doors there’s more work for the attacker. But the problem is that also means it’s more work for the user. They may end up just leaving all the doors unlocked rather than having to carry around that heavy keychain with all those keys and remember which is which. If they had only one door, they may even be willing to carry around two keys for that one door. And the user understands better that they are risking everything by not locking that one door versus having to train them that one of the ten doors they have to deal with is more important than the others. All of this is meant to say: having lots of passwords is really just a form of security through obscurity, and the one who you end up forcing to deal with that obscurity is the user. And we’ve seen how well they choose to deal with it. So it seems to me that less is more in this case. Fewer doors will mean more security. Mostly because the users will be more likely to participate.

SAML joins the IT zombie legions?

I’ve had the privilege to witness many IT funerals. By my reckoning, Mainframes, CORBA, PKI, AS400, NIS+, and countless others are all dead according to the experts. Of course, that means nearly every customer I talk with is overrun with zombies. Because these technologies are still very much alive, or at least undead, in their infrastructures. They are spending tons of money on them. They are maintaining specialized staff to deal with them. And, most importantly of all, they are still running revenue generating platforms on them. Now some of the venerable folks speaking at CIS2012 want to count SAML among the undead. It’s a sign of the ever increasing pace of IT. SAML, if it’s dead, will be leaving a very handsome corpse. But I think it’s safe to say SAML will be with us for a very long time to come. This meme feels like another flashpoint in the tensions between thought leaders like the list of folks discussing this on twitter (myself included) and the practitioners who have to answer to all the folks in suits who just want to see their needs met. I try to split the difference. It seems to me that the only thing that makes something dead is when people are actively trying to get away from it because they are losing money on it. SAML is nowhere near that. But if dead is defined as not being a destination but rather a landmark in a receding landscape, then maybe it has died. But it’s chasing after us hungry for our budgets and offering being impervious to pain as a trade for that funding, which sounds like some kind of zombie to me. Using SAML will make you impervious to the pain of being so far ahead of the curve there is no good vendor support, impervious to the pain of having so few people with talent in your platform that you can’t get things done – or have to pay so much to get things done you may as well not do them, and impervious to the pain of being unable to get what you need done because there aren’t enough working examples of how to do it.
Based on what I hear from practitioners, they may like being impervious to all those pains. So the IT zombie legions grow…

IAM Liaisons: Multiple Identities in the Days of Cloud & LARPing

I’m in the car listening to an NPR piece about LARPing while driving between meetings. Something they say catches my ear. It seems LARPers (is that even a word?) have an impulse to create immersive identities aside from their own because they want more degrees of freedom to experience the world. In case you’re in the dark about what LARPing is (like I was), it’s Live Action Role Playing – dressing up as characters and acting out stories in real world settings as opposed to scripted controlled settings. It’s clear how Maslow’s Hierarchy of Needs applies here. You won’t find a lot of LARPing in war torn areas, or communities suffering from rampant poverty. But does a group of people having enough energy to spare in their identity establishment to want to spawn new identities to live with imply an Identity Hierarchy of Needs? Could it be that when you have enough security in the identity you need, you seek out ways to make the identity that you want more real than just going to the gym to get better abs?

Maslow’s hierarchy of needs is one of my favorite conceptual frameworks. Not only is it extremely powerful in its home context of psychology, not only is it useful in framing the psychological impacts of many things from other contexts (political, philosophical, economic), it’s also useful as a general skeleton for understanding other relationships. My marketing team recently applied it to Quest’s IAM portfolio. They framed our solutions as layers of technology that could get your house in order to achieve the far out goals of total governance and policy based access management, which they identified as Maslow’s highest order. But I’m thinking about this more in terms of pure, individual identity. Of course the technology tracks alongside that in many ways. The LARPing is what got me thinking, but the other parallels become immediately clear. How many people have multiple social networking accounts? A page for business tied to a Twitter account, a Facebook presence as a personal playground, and a LinkedIn page for a resume are standard fare for many folks in the high tech biz, and beyond. Again, it’s not likely that a blue collar factory worker would have all these identities to express themselves. Like Maslow’s original idea, there is a notion of needing the energy to spare and the right incentives to take the time. There is also an interesting socio-political dimension to this I’ll leave as an exercise to the reader.

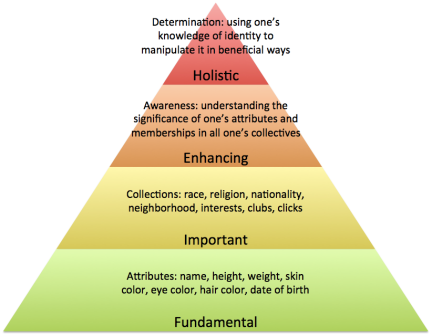

The first question is clear: what would an identity hierarchy of needs look like? If one googles “hierarchy of needs” AND “Identity management”, there are a dizzying number of hits. So it’s not like this hasn’t been explored before. Some good ones come from Dave Shackleford who applies the hierarchy to security and R “Ray” Wang who applies it more widely to making choices about technology decisions. But these only treat IAM as an element of their whole. I want to apply it to identity by itself.

One thing I’ll borrow from Dave’s structure is the four categories he uses (from the bottom up): fundamental, important, enhancing, holistic. I won’t pretend I’m going to get this right at this point. I would love to get feedback on how to make this better. But I’ll take a stab at making this work. The assumption here is that there is no identity without attributes. What does it mean to say “I am Jonathan” if it’s not to assert that this thing “I” has an attribute labeled “name” that is given the value “Jonathan”? And this is more than a technology thing. All notions of identity boil down to attributes and collections of attributes. The next layer deals with taking identities that are collections of attributes and giving them places in groupings. Call them roles, groups, social clubs, parishes, or whatever you like. Membership in collections helps define us. The next two layers were harder to work out, at first. But then I realized it was about the turn inward. Much like Maslow’s higher levels are where you work on your inner self, the upper layers of our identity hierarchy are about understanding and controlling our attributes and participation in collectives. First we need to realize what those are. Then we need to use this knowledge to gain the power to determine them.
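
For the code-minded, here is one way those four layers could be sketched as a data structure. This is only an illustration under my own assumptions: the `Identity` class, its method names, and the sample values are hypothetical and not drawn from any IAM product.

```python
from dataclasses import dataclass, field


@dataclass
class Identity:
    """Hypothetical model of the four layers; not any real product's schema."""

    # Fundamental: an identity is its attributes, e.g. name -> "Jonathan".
    attributes: dict = field(default_factory=dict)
    # Important: membership in collectives (roles, groups, clubs, parishes).
    collectives: set = field(default_factory=set)

    def describe(self) -> dict:
        # Enhancing: being able to see what attributes and memberships you hold.
        return {
            "attributes": dict(self.attributes),
            "collectives": sorted(self.collectives),
        }

    def set_attribute(self, name: str, value: str) -> None:
        # Holistic: self determination over your own attributes.
        self.attributes[name] = value

    def leave(self, collective: str) -> None:
        # Holistic: self determination over your own memberships.
        self.collectives.discard(collective)


me = Identity(attributes={"name": "Jonathan"}, collectives={"IAM practitioners"})
```

The point of the sketch is the ordering: `describe` is useless without the first two fields, and the last two methods are risky to hand out before `describe` exists.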

Self determination is actually the perfect phrase to tie together all these thoughts. What was it about the LARPers that triggered all these thoughts? It was that they had decided to actively take control of their identities to the point of altering them, even bifurcating them. That may make it sound like I’m making them out to be the masters of the universe (and not just because some do dress up as He-Man characters). But just like some folks can live in a psychological state pretty high up on the Maslow hierarchy without putting in much effort to achieve the first few levels, the same can be true of folks in the identity hierarchy, I’d think. If you have your most important attributes defined for you by default, get assigned reasonable collectives to belong to, and even have a decent awareness of this without challenging it, then you may grow up to be the special kind of geek that likes to LARP. That pleasure derived from splitting your personality is likely something that’s largely implicit – you don’t need to understand it too deeply.

Of course, if this all feels too geeky to apply to regular folks, I can turn to what may be the oldest form of this identity splitting. The “liaisons” in the title came from a notion that maybe folks carrying out complicated affairs of the heart were trying to bifurcate their own identities in a bid to push self determination before there was any better outlet. No excuse for serial adultery, but it gives a new prism through which to view the characters in Dangerous Liaisons, perhaps. How many times in novels does the main motivation for these affairs come down to a desire for drama, romance, or a cure for bourgeois boredom? How many times on The People’s Court? The point is that just like people who have climbed to the top of Maslow’s Hierarchy may not have done so using morally good means and may not use their perch to better the world, people who are experimenting in self determination to the point of maintaining multiple identities in their lives may not be doing it for the most upstanding of reasons, either.

And how does this all relate back to the technology of IAM? Maybe it doesn’t very concretely. I’d be OK with that. It may if you consider that there are many people out there trying to hand their users self determination through IAM self service without first having a grip on what attributes make up an identity. How can you expect them to determine their fate if they have no idea what their basic makeup is? We expect users to take the reins of managing their access rights, certifying the rights of others, and performing complicated IAM tasks. But if they ask “Why is this person in this group?” we have no good answers. Then we’re surprised at the result. So maybe this applies very well. Finally, what does this have to do with the cloud? Clearly, cloud means more identities. Many times they are created by the business seeking agility and doing things with almost no touch by IT. If the cloud providers give them a better sense of identity than you do, then that’s where they will feel more able to determine their own fate. Some may say “But that’s not fair. That cloud provider only needs to deal with a small bit of that person’s identity and so it’s easier for them!” Life is not fair. But if you established a strong sense of what an identity is and how it belongs in collectives, gave users ways to understand that, and then enabled them to control it, you would be far ahead of any cloud provider. But it all starts with simply understanding how to ask the right questions.

I expect (and hope) to raise more questions with all of this than to answer them. This is all a very volatile bed of thoughts at the moment. I’m hoping others may have things to say to help me figure this all out. As always, I expect I’ll learn the most by talking to people about it.

“Security” is still seen as reactive controls & ignores IAM

There was an excellent article at Dark Reading the other day about data leaks focusing on insider threats. It did all the right things by pointing out “insiders have access to critical company information, and there are dozens of ways for them to steal it” and “these attacks can have significant impact” even though “insider threats represent only a fraction of all attacks–just 4%, according to Verizon’s 2012 Data Breach Investigations Report.” The article goes on to discuss how you can use gateways, DLP for at rest and in flight data, behavioral anomaly detection, and a few other technologies in a “layered approach using security controls at the network, host, and human levels.” I agree with every word.

Yet, there is one aspect of the controls that somehow escapes mention – letting a potentially powerful ally in this fight off the hook from any action. There is not one mention of proactive controls inside the applications and platforms that can be placed there by IAM. A great deal of insider access is inappropriate. Either it’s been accrued over time or granted as part of a lazy “make them look like that other person” approach to managing entitlements. And app-dev teams build their own version of security into each and every little application they pump out. They repeat mistakes, build silos, and fail to consume common data or correctly reflect corporate policies. If these problems with entitlement management and policy enforcement could be fixed at the application level, the threats any insider could pose would be proactively reduced by cutting off access to data they might try to steal in the first place. It’s even possible to design a system where the behavioral anomaly detection systems could be consulted before even handing data over to a user when some thresholds are breached during a transaction – in essence, catching the potential thief red handed.
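
To make that last idea concrete, here is a minimal sketch of an application-level check that enforces entitlements first and then consults an anomaly score before releasing any data. Everything in it is assumed for illustration: the `ENTITLEMENTS` table, the toy `anomaly_score` function, and the threshold are hypothetical stand-ins, not the interface of any real DLP or behavioral detection product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    user: str
    resource: str
    records_requested: int


# Hypothetical entitlement store: which resources each user may read.
ENTITLEMENTS = {"alice": {"customer_db"}, "bob": {"hr_files"}}


def anomaly_score(request: Request, typical_records: int = 50) -> float:
    # Toy stand-in for behavioral anomaly detection: how many times larger
    # this request is than the user's assumed typical request.
    return request.records_requested / typical_records


def decide(request: Request, threshold: float = 10.0) -> str:
    # Proactive control: no entitlement means no data, whatever the behavior.
    if request.resource not in ENTITLEMENTS.get(request.user, set()):
        return "deny"
    # Consult the anomaly signal before handing data over, not after.
    if anomaly_score(request) > threshold:
        return "hold_for_review"
    return "allow"
```

Under these assumptions, `decide(Request("alice", "customer_db", 5000))` is held for review even though alice is entitled to the resource, which is the "caught red handed" case.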

Why do they get let off the hook? Because it’s easier to build walls, post guards, and gather intelligence than it is to climb right inside of the applications and business processes to fix the root causes. It’s easier to move the levers you have direct control over in IT rather than sit with the business and have the value conversation to make them change things in the business. It’s cheaper now to do the perimeter changes, regardless of the payoff – or costs – later. Again, this is not to indict the content of the article. It was absolutely correct about how people can and very likely will choose to address these threats. But I think everyone knows there are other ways that don’t get discussed as much because they are harder. In his XKCD comic entitled “The General Problem,” Randall Munroe says it best: “I find that when someone’s taking time to do something right in the present, they’re a perfectionist with no ability to prioritize, whereas when someone took time to do something right in the past, they’re a master artisan of great foresight.” I think what we need right now are some master artisans who are willing to take the heat today for better security tomorrow.

The IP & Privacy Link – @Harkaway at #GartnerIAM

As the new season of conferences kicks into gear, I start to have thoughts too big to fit into tweets again. I once again had the pleasure of making it to London for the EMEA Gartner IAM Summit. There was a big crowd this year, and the best part, as it always is, was the conversations in hallways and at bars surrounding the official agenda. It’s always good to get together with lots of like minded folks and talk shop.

On stage, the conversations were intense as always. @IdentityWoman took the stage and educated a very curious audience about what identity can mean in this brave new mobile world. And there was an interesting case made that “people will figure out that authentication is a vestigial organ” by @bobblakley. But the comment that caught my imagination most of all was by author and raconteur Nick Harkaway, aka @Harkaway.

He links IP (Intellectual Property for clarity since there are a few “IP” thingys floating around now) and privacy in a way that never occurred to me before. @Harkaway says “both [are] a sense of ownership about data you create even after you’ve put it out into the world.” @IdentityWoman spoke at length about how our phones leave trails of data we want to control for privacy and perhaps profit reasons, and @bobblakley even proposed how to use that sort of data for authentication. At the core of both of those ideas is a sense of ownership. If it’s “the data is mine and I want to keep it private” or “the data is mine and I want the right to sell it”, it’s all about starting from the data being something that belongs to you.

I typically react with skepticism to IP but with very open arms to privacy. So to suddenly have them linked in this way was quite a dissonance. But what difference is there between saying that I write this work of fiction and expect it to be mine even after it’s complete, and saying that I create this mass of geo-data by moving around with my phone and expect it to be mine even after I’m in bed at night? “But it’s the carrier’s responsibility to actually generate and maintain that data!” OK. But if I write my work using Google Docs does that alter my IP rights? Does it matter perhaps that the novel is about something other than me? Does it matter that geo-data is not creative? (Of course, some geo-data is creative.)

I don’t have all, or perhaps any, answers here. But I thought this notion was worthy of fleshing out and further sharing. What do you think? Are IP and privacy in some way intimately linked?

Apple’s iCloud IAM Challenges – Does Match Need ABAC?

I swear this is not just a hit grab. I know that’s what I think every time I see someone write about Apple. But the other day I was clearing off files from the family computer where we store all the music and videos and such because the disk space is getting tight. I’ve been holding off upgrading or getting more storage thinking that iCloud, Amazon Cloud Drive, or even the rumored gDrive may save me the trouble. So the research began. Most of it focused on features that are tangential to IAM. But Apple’s proposed “iTunes Match” got me thinking about how they would work out the kinks from an access standpoint in many use cases. If you don’t feel like reading about it, the sketch of what it will be is this: you have iTunes run a “match” on all the music you have that you did *not* get from Apple, and it will then give you access, via iCloud, to the copies Apple already has of those tracks on their servers at their high quality bit rate, instead of making you upload them.

All the string matching levels of h3ll this old Perl hacker thought of immediately aside, it became clear that they were going to use the existence of the file in your library as a token to access a copy of the same song in theirs. Now, my intent is to use this as a backup as well as a convenience. So maybe I’m not their prime focus. But a number of access questions became clear to me. What happens if I lose the local copy of a matched song? If I had it at one time does that establish a token or set some attribute on their end that ensures I can get it again? Since they have likely got a higher quality copy, do I have to pay them a difference? I had to do that with all the older songs I got from iTunes for the MP3 DRM-free versions, so why not this? Of course, if the lost local copy means that I can no longer have access to the iCloud copy, then this cannot act as a backup. So that would kill it for me.
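To make the two possibilities concrete, here is a minimal sketch of the difference between "the local file is the token" and "the match sets an attribute on their end." Everything in it is hypothetical: the `MatchService` class, its method names, and the track IDs are my own illustration, not anything Apple has described.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class MatchService:
    """Hypothetical match-style service; not Apple's actual design."""

    catalog: set[str]  # track IDs the provider already hosts
    # Per-user attribute recorded at match time (assumed, for illustration).
    entitlements: dict[str, set[str]] = field(default_factory=dict)

    def run_match(self, user: str, local_library: set[str]) -> set[str]:
        """Match local tracks against the catalog and record the result."""
        matched = local_library & self.catalog
        self.entitlements.setdefault(user, set()).update(matched)
        return matched

    def can_stream_by_possession(self, local_library: set[str], track: str) -> bool:
        """Model A: the local file itself is the access token."""
        return track in local_library and track in self.catalog

    def can_stream_by_attribute(self, user: str, track: str) -> bool:
        """Model B: access depends on the attribute set when the match ran."""
        return track in self.entitlements.get(user, set())


service = MatchService(catalog={"song-a", "song-b"})
library = {"song-a", "song-c"}
service.run_match("me", library)

library.discard("song-a")  # the local copy is lost

print(service.can_stream_by_possession(library, "song-a"))
print(service.can_stream_by_attribute("me", "song-a"))
```

Under the possession model the lost file takes my access with it, so the service cannot double as a backup. Under the attribute model I keep access, which is exactly the behavior that raises the questions about how that attribute was earned in the first place.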

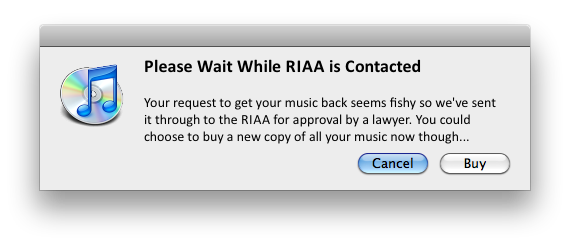

But these problems have bigger weight for Apple than users not choosing them for backup features. There is a legal elephant in the room. How can Apple be sure they are not getting the music industry to grant access to high quality, completely legit copies of tracks in exchange for the presence of tracks that were illegally downloaded? In an industry supported by people paying for software, I’m always shocked at how lonely I am when I say my entire music collection is legal – or, at least, as legal as it is to rip songs from CDs for about 40% of the bulk of it. It’s one thing for a cloud provider to say “here’s a disk, upload what you like. And over here in this legal clean room is a music player that could, if you want, play music that may be on your drive.” But Apple is drawing a direct connection between having a track and granting permissions to a completely different track. Then pile on a use case where some joker has the worst collection of quadruple-compressed tracks, downloaded from Napster when he was 12, and pours coffee on his hard drive the day after iTunes Match gave him access to 256 Kbps versions of all his favorite tunes.

If this were a corporate client I was talking to, I’d be talking about the right workflow and access certification to jump these hurdles. Can you picture the iTunes dialog box telling you that your music request is being approved? That would be very popular with end users…